A post about technology on the technology community?

What year is this?

Yeah, I didn’t see Elon Musk, Trump, or AI mentioned at all. What’s happening?

Ah. It’s… six times faster than my SSD, which was already fast. This runs faster than some RAM. God damn.

Which is the ultimate goal, I think: if your main storage is already as fast as RAM, then you just don’t need RAM anymore, and you also can’t run out of memory in most cases, since the whole program is functionally already loaded.

I’m sure there are data science/datacenter people who can appreciate this. For me, all I’m thinking about is how hot it runs and how much I wish 20TB SSDs would soon be priced like HDDs.

Nah, datacenters care more about capacity or IOPS. Throughput is meaningless, since you’ll always be bottlenecked by the network.

Not necessarily if you run workloads within the datacenter? Surely that’s not that rare, even if they’re mostly for hosting web services.

Yeah, but 15 GB/s is 120 Gbit/s. Your storage nodes are going to need more than 2x800 Gbit/s if you want to take advantage of the bandwidth once you start putting more than 14 drives in. Also, those 14 drives probably won’t have more than 30M IOPS. Your typical 2U storage node is going to have something like 24 drives, so you’ll probably be bottlenecked by bandwidth or IOPS whether you put in 15 GB/s drives or 7 GB/s drives.
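A rough sketch of that arithmetic, assuming decimal units, no protocol overhead, and every drive streaming flat out at the same time (none of which real nodes quite manage). The helper names are just for illustration:

```python
# Back-of-envelope check of the storage-node numbers above.
# Assumptions (not from the thread): decimal units, no protocol overhead,
# and every drive streaming at its full rated sequential speed at once.

def gbytes_to_gbits(gb_per_s: float) -> float:
    """Convert GB/s to Gbit/s (8 bits per byte)."""
    return gb_per_s * 8


def drives_to_saturate(drive_gb_per_s: float, nic_gbit_per_s: float) -> float:
    """How many drives it takes to fill the node's network bandwidth."""
    return nic_gbit_per_s / gbytes_to_gbits(drive_gb_per_s)


NIC_GBIT = 2 * 800  # two 800 Gbit/s ports per node

for drive in (7.0, 15.0):
    n = drives_to_saturate(drive, NIC_GBIT)
    print(f"{drive:>4} GB/s drives: network is full after {n:.1f} drives")
```

Under those assumptions, two 800 Gbit/s ports fill up at about 13.3 drives of 15 GB/s, so the 14th drive is where the network becomes the limit, versus roughly 28.6 drives of 7 GB/s.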

Maybe it makes sense these days, I haven’t seen any big storage servers myself, I’m usually working with cloud or lab environments.

If what you’re doing is database queries on large datasets, the network speed is not even close to the bottleneck unless you have a really dumbly partitioned cluster (in which case you need to fire your systems designer and your DBA).

There are more kinds of loads than just serving static data over a network.

Science stuff, though…

I work in bioinformatics. The faster the hard drive, the better! Some of my recent jobs were running some poorly optimized code that would turn 1 TB of data into 10 TB of output. So painful to run with 36 replicates.
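To put that in perspective, here’s a back-of-envelope estimate of just the write time for 36 replicates of 10 TB each. It’s a hypothetical sketch: it assumes a single drive, one replicate after another, and the rated sequential write speed held the whole time, which consumer drives usually can’t do once their SLC cache fills.

```python
# Rough write-time estimate for the bioinformatics job described above.
# Assumptions (hypothetical, not from the comment): all 36 replicates write
# to a single drive one after another, the drive sustains its rated
# sequential write speed the whole time, and 1 TB = 10**12 bytes.

TB = 10**12
GB = 10**9


def write_hours(total_bytes: int, write_speed_bytes_per_s: float) -> float:
    """Hours needed to write total_bytes at a constant speed."""
    return total_bytes / write_speed_bytes_per_s / 3600


output_per_replicate = 10 * TB
replicates = 36
total = output_per_replicate * replicates  # 360 TB written in total

for speed_gb in (0.55, 7, 15):  # SATA SSD, typical NVMe, the new drive
    print(f"{speed_gb:>5} GB/s: {write_hours(total, speed_gb * GB):6.1f} h")
```

Even with those generous assumptions, that’s roughly 182 hours at SATA speeds, about 14.3 hours at 7 GB/s, and about 6.7 hours at 15 GB/s.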

Are you hiring ^^ ?

Love that kind of stuff.

A lot of it is moving through software-defined networking, which runs at RAM speeds.

But typically responsiveness is quite important in a virtualized environment.

InfiniBand could theoretically run at 2400 Gbps, which is 300 GB/s.

Agreed. I’d happily settle for 1GB/s, maybe even less, if I could get the random seek times, power usage, durability, and density of SSDs without paying through the nose.

I’d be more than happy with 1GB/s drives for storage. I’d be happy with SATA3 SSD speeds. I’d be happy if they were still sized like a 2.5" drive. USB4 ports go up to 80 Gb/s. I’d be happy with an external drive bay with each slot doing 1 GB/s.
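For a rough idea of what that bay could look like, here’s the arithmetic for how many 1 GB/s slots a single 80 Gb/s port could feed. It assumes the whole link rate is usable for data, so treat the result as an upper bound:

```python
# How many 1 GB/s drive slots could one USB4 port feed in theory?
# Assumption: the full 80 Gbit/s link rate is usable for data, which real
# enclosures will not achieve once protocol overhead is counted.

def max_slots(link_gbit_per_s: float, slot_gbyte_per_s: float) -> int:
    """Whole number of slots that fit in the link at full speed."""
    link_gbyte_per_s = link_gbit_per_s / 8  # 8 bits per byte
    return int(link_gbyte_per_s // slot_gbyte_per_s)


print(max_slots(80, 1.0))  # upper bound before overhead
```

That comes out to 10 slots before any protocol overhead, so a real enclosure would manage somewhat fewer at full speed.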

The trouble with ridiculous R/W numbers like these is not that there’s no theoretical benefit to faster storage, it’s that the quoted numbers are always for sequential access, whereas most desktop workloads are closer to random access, which flash memory kinda sucks at. Even really good SSDs only deliver ~100MB/sec in pure random access scenarios. This is why you don’t really feel any difference between a decent PCIe 3.0 M.2 drive and one of these insane-o PCIe 5.0 drives, unless you’re doing a lot of bulk copying of large files on a regular basis.
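A quick way to see where a number like ~100MB/sec comes from: at queue depth 1 the drive only has one request in flight, so throughput is just block size divided by per-request latency. The latencies below are assumed round numbers for illustration, not benchmarks of any specific drive:

```python
# Why QD1 random reads look slow: with only one request in flight, the drive
# can finish at most 1 / latency requests per second, each carrying one block.
# The latency figures below are illustrative assumptions, not measurements.

def qd1_throughput_mb_s(block_bytes: int, latency_s: float) -> float:
    """Throughput in MB/s (10**6 bytes) when each request waits for the last."""
    iops = 1 / latency_s
    return iops * block_bytes / 1e6


BLOCK = 4096  # 4 KiB random reads

for name, latency_us in [("assumed SATA SSD", 100),
                         ("assumed NVMe SSD", 40),
                         ("assumed Optane", 10)]:
    mb_s = qd1_throughput_mb_s(BLOCK, latency_us * 1e-6)
    print(f"{name:<17} {latency_us:>4} us -> {mb_s:6.1f} MB/s")
```

With 4 KiB reads, a 40 µs round trip works out to about 102 MB/s, no matter how high the drive’s sequential rating is.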

It’s also why Intel Optane drives became the steal of the century when they went on clearance after Intel abandoned the tech. Optane is basically as fast in random access as in sequential access, which means that in some scenarios even a PCIe 3.0 Optane drive can feel much, much snappier than a PCIe 4.0 or 5.0 SSD that looks faster on paper.

which flash memory kinda sucks at.

Au contraire, flash is amazing at random R/W compared to all previous non-volatile technologies. The fastest hard drives can do what, 4MB/s with 4k sectors, assuming a quarter rotation per random seek? And that’s still fantastic compared to optical media, which in turn is way better than tape.
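That estimate is easy to reproduce. The sketch below assumes a 15,000 RPM drive, treats each random 4 KiB read as costing a quarter rotation, and ignores head-movement time entirely, so it’s an optimistic upper bound:

```python
# Reproducing the hard-drive estimate above: one 4 KiB read per random seek,
# with each seek costing a quarter of a platter rotation.
# Assumptions: a 15,000 RPM drive (the fastest common spindle speed) and
# zero head-movement time, so this is an optimistic upper bound.

def hdd_random_mb_s(rpm: int, block_bytes: int,
                    rotation_fraction: float = 0.25) -> float:
    rotations_per_s = rpm / 60
    seek_time_s = rotation_fraction / rotations_per_s
    iops = 1 / seek_time_s
    return iops * block_bytes / 1e6


print(f"{hdd_random_mb_s(15_000, 4096):.1f} MB/s")  # fastest spindles
print(f"{hdd_random_mb_s(7_200, 4096):.1f} MB/s")   # typical desktop drive
```

That gives about 4.1 MB/s at 15,000 RPM and roughly 2 MB/s for a 7,200 RPM desktop drive.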

Obviously, volatile memory like SDRAM puts it to shame, but I’m a pretty big fan of being able to reboot.

Fair point. My thrust was more that things like system boot times and software launch speeds don’t seem to benefit as much as they should when moving from, say, a good SATA SSD (peak R/W speed: 600 MB/sec) to a fast M.2 drive that might list speeds 20+ times higher. The reason is that the QD1 performance of that M.2 drive might only be 3 or 4 times better than the SATA drive’s. Both are a big step up from spinning media, but the gap between the two in random read speed isn’t big enough to make a huge subjective difference in many desktop use cases.
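A toy model makes the point: if a load is mostly small dependent reads that each pay one QD1 latency, plus some bulk streaming, the latency term dominates. All the numbers here (20,000 random reads, 500 MB of sequential data, 140 µs vs 40 µs QD1 latency, 550 vs 12,000 MB/s sequential) are hypothetical, picked only to match the rough ratios in the comment:

```python
# A toy model of why a drive that is ~20x faster sequentially does not make
# boot or app launch ~20x faster. Every number here is a hypothetical
# assumption chosen for illustration, not a benchmark result.

def load_time_s(random_reads: int, qd1_latency_s: float,
                sequential_bytes: int, seq_bytes_per_s: float) -> float:
    """Time = dependent small reads (one latency each) + bulk streaming."""
    return random_reads * qd1_latency_s + sequential_bytes / seq_bytes_per_s


WORKLOAD = dict(random_reads=20_000, sequential_bytes=500 * 10**6)

sata = load_time_s(qd1_latency_s=140e-6, seq_bytes_per_s=550e6, **WORKLOAD)
nvme = load_time_s(qd1_latency_s=40e-6, seq_bytes_per_s=12_000e6, **WORKLOAD)

print(f"sequential speedup:  {12_000 / 550:.1f}x")
print(f"QD1 latency speedup: {140 / 40:.1f}x")
print(f"whole-load speedup:  {sata / nvme:.1f}x")
```

With these made-up inputs the sequential rating improves about 21.8x and QD1 latency 3.5x, but the whole load only gets about 4.4x faster.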

Why was Optane so good with random access? Why did Intel abandon the tech?

It didn’t sell well. I assume that if they had been able to tie it to today’s need for NVRAM on GPUs for AI, they would have sold a bunch. I’m surprised we don’t see a “PCIe RAM expansion pack” for Nvidia GPUs yet.

This is all a lot easier to create than it is to write the software for.

Intel went broke and had to cut it.

Agreed, one lane of PCIe 4.0 per M.2 SSD is enough.
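For reference, here’s the raw per-lane arithmetic. PCIe 3.0 through 5.0 use 128b/130b encoding; packet and protocol overhead aren’t modeled, so usable throughput is a bit lower:

```python
# Raw per-lane bandwidth for recent PCIe generations, to put "one lane of
# PCIe 4.0 per SSD" in numbers. PCIe 3.0 through 5.0 use 128b/130b line
# encoding; packet and protocol overhead are ignored, so real drives see less.

TRANSFER_RATE_GT_S = {"3.0": 8, "4.0": 16, "5.0": 32}


def lane_gbytes_per_s(gen: str, lanes: int = 1) -> float:
    gt_s = TRANSFER_RATE_GT_S[gen]
    payload_gbit_s = gt_s * 128 / 130  # strip 128b/130b encoding overhead
    return payload_gbit_s / 8 * lanes  # bits -> bytes, times lane count


for gen in TRANSFER_RATE_GT_S:
    print(f"PCIe {gen}: x1 = {lane_gbytes_per_s(gen):.2f} GB/s, "
          f"x4 = {lane_gbytes_per_s(gen, 4):.2f} GB/s")
```

That’s roughly 1.97 GB/s for one PCIe 4.0 lane, comfortably above 1 GB/s, and about 15.75 GB/s for PCIe 5.0 x4, so assuming the usual x4 M.2 link, a ~15 GB/s drive is already close to what its link can carry.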

Give me more slots instead.

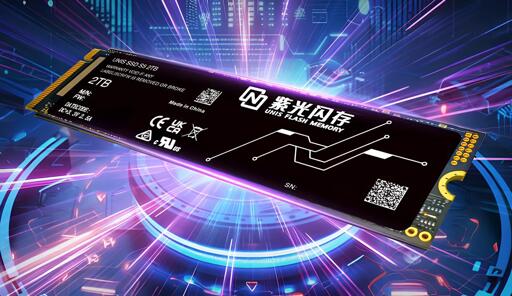

Not to mention, I’d be very cautious about the stratospheric claims of a never-before-heard-of Chinese manufacturer…

So no need for RAM when?

15 GB/s is about on par with single-channel DDR3-1866. High-end DDR5 can do well over triple that.
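The comparison works out like this: peak bandwidth per channel is transfers per second times bytes per transfer. The sketch assumes a standard 64-bit (8-byte) data path per channel and picks DDR5-6400 as an example of a high-end speed grade:

```python
# Peak theoretical bandwidth of one 64-bit memory channel: transfers per
# second times bytes per transfer. For DDR5 the 64 bits are split into two
# 32-bit subchannels. Real-world throughput is lower than these peaks.

def channel_gbytes_per_s(mega_transfers_per_s: float, bus_bytes: int = 8) -> float:
    return mega_transfers_per_s * 1e6 * bus_bytes / 1e9


ddr3 = channel_gbytes_per_s(1866)
ddr5 = channel_gbytes_per_s(6400)  # assumed "high-end" DDR5 speed grade
print(f"DDR3-1866: {ddr3:.1f} GB/s per channel")
print(f"DDR5-6400: {ddr5:.1f} GB/s per channel ({ddr5 / ddr3:.1f}x DDR3-1866)")
```

That’s about 14.9 GB/s for one channel of DDR3-1866 and 51.2 GB/s for DDR5-6400, roughly 3.4x; dual-channel setups double both.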

And that’s not to mention the latency, which is the real point of RAM.

One of the biggest bottlenecks in many workloads is latency. On a cache miss, the CPU stalls waiting for main memory. Flash storage, even on an NVMe bus, is two orders of magnitude slower than RAM.

For example, L3 cache takes approximately 10-20 nanoseconds, RAM takes closer to 100 nanoseconds, and NVMe flash takes more than 10,000 nanoseconds (>10 microseconds).
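To make those latencies concrete, here they are in CPU clock cycles, i.e. roughly how long a core could stall on one miss. It assumes a 4 GHz clock and uses the upper end of the L3 range from the comment:

```python
# Converting the latencies above into CPU clock cycles.
CLOCK_HZ = 4e9  # assumed core frequency, not from the comment

# Rough latencies quoted above, in nanoseconds (upper end of the L3 range).
LATENCY_NS = {"L3 cache": 20, "DRAM": 100, "NVMe flash": 10_000}


def cycles_for(latency_ns: float, clock_hz: float = CLOCK_HZ) -> float:
    """Clock cycles that elapse while waiting latency_ns nanoseconds."""
    return latency_ns * 1e-9 * clock_hz


for tier, ns in LATENCY_NS.items():
    print(f"{tier:<10} {ns:>6,} ns = {cycles_for(ns):>8,.0f} cycles")
```

At 4 GHz that’s about 80 cycles for L3, 400 for DRAM, and 40,000 for NVMe flash.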

Depending on your age, you may remember the transition from hard drives to SSDs. SSDs could make a machine feel much snappier. Early PC SSDs didn’t have significantly higher throughput than hard drives (many current ones are even slower at writing once they run out of SLC cache); what they had was significantly lower latency.

As an aside, Intel and Micron’s 3D XPoint was super interesting technically. It was capable of under 5,000 nanoseconds in early-generation parts, meaning it sat between DDR RAM and flash.

Aren’t there NVRAM DIMMs using a sort of hybrid?

You want RAM because you don’t want your computer to constantly store, read, and write TBs of temporary/useless data on your main storage. You need a form of cache for even faster read/write times.

Gigabytes of L3 cache when

Gigabytes plural? That may take a while. Gigabyte singular? Already a thing: AMD EPYC 9684X (https://www.amd.com/en/products/processors/server/epyc/4th-generation-9004-and-8004-series/amd-epyc-9684x.html)

Well, Intel Optane failed, but you can use swap as RAM any time you want!

Never

IMO another example of pushing numbers ahead of what’s actually needed, and benefitting manufacturers way more than the end user. Get this for bragging rights? Sure, you do you. Some server/enterprise niche use case? Maybe. But I’m sure that for 90% of people, including even those with somewhat more demanding storage requirements, a PCIe 4 NVMe drive is still plenty in terms of throughput. At the same time, SSD prices have been hovering around the same point for the past three to five years, and there hasn’t been significant progress in capacity: 8 TB models are still rare and disproportionately expensive, almost exotic. I personally would be much more excited to see a cool, efficient and reasonably priced 8/16 TB PCIe 4 drive than a pointlessly fast 1/2/4 TB PCIe 5 one.

I never understood this kind of objection. You yourself state that maybe 10% of users can find some good use for this - and that means that we should stop developing the technology until some arbitrary, higher threshold is met? 10% of users is an incredibly big amount! Why is that too little for this development to make sense?

I’m not saying “don’t make progress”, I’m saying “try to make progress across the board”.

That’s not how R&D works. It’s really rare to have “progress across the board”, usually you have incremental improvements in specific areas that come together to an across-the-board improvement.

So we’d be getting improvements slower since there’s much less profit from individual advancements, as they can’t be released. What’s the advantage here?

How many IOPS?

I wonder why they’re not using TB/s like 14.9TB/s

Edit: GB/s

Because those are megabytes, not gigabytes

Oh good point. 14.9GB/s

Probably a holdover from the SATA days, or simply because it’s nice to show the number doubling into the tens of thousands.

Assuming you meant GB/s, not TB/s, I think it’s for the sake of convenience when doing comparisons - there are still SATA SSDs around and in terms of sequential reads and writes those top out at what the interface allows, i.e. 500-550 MB/s.

Yeah, I meant GB/s. Thanks for pointing that out.

Because bigger number better.

That’s basically how all storage speeds are handled. HDDs are around 300MB/s, current NVMe drives are around 7000MB/s, etc. Keeping everything on the same scale makes comparison easier.

So the computer-illiterate don’t think it’s a smaller number.