Show’s over, go home

some device updated itself overnight and the interface color palette was completely different/ugly when it restarted. of course the settings to fix that are behind several layers of counter-intuitive puzzle boxes, and the settings search feature is bringing up every other setting for “color” or “theme” that doesn’t relate to what i’m looking for.

so i start searching online, and i swear to god, the first couple of hits with promising page titles seem to have generative content directing me to access menus and settings that don’t exist.

yesterday, i was directed to run some content I’d written through an LLM to standardize readability to our organizational recommendation. never used one, so i found the one that wouldn’t make me generate my own user account (copilot).

i give it the prompt, it asks for the attachment, i submit, it claims to be done and tells me i can get a downloadable version. that sounds good, because i don’t see the full version in the response. i ask for a downloadable version and it says it will give that to me right away. a minute later nothing, so i ask how to download it and it tells me to click the download link above. there isn’t one. i figure maybe it’s a security / browser thing with firefox, so i go through this again with edge, so it can all be Microsoft.

exact same thing happens. keeps telling me it has completed the task and referring me to click a download link that does not exist.

after scouring the interface for any possible place it could be, i search that issue and apparently this is a thing copilot and some llms do. they say they did the thing and refer to download links that don’t exist.

this is the shittiest technology ever deployed.

The LLMs are built to give you the answer you want to hear. If you ask for something, they will happily tell you they are providing it to you, even when it is literally beyond their capabilities. I once spent a depressingly long amount of time wrestling with one to get a 2000 word response, just Lorem Ipsum type stuff. It was completely incapable of giving me the correct amount of text, but kept saying it was and apologising while not providing what I asked for. Turns out that particular model had a roughly 750 word limit on responses, but they don’t actually tell you what they can and can’t do, because you can’t sell them as a magical cure-all solution to all tech problems if they lay out the very specific things that they can actually do instead of pretending they can do anything.

FYI, you can open Word, type =lorem(paragraphs, sentences) on a new line, and press Enter, and it will give you all the lorem ipsum text you need.

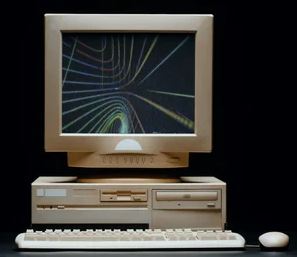

It always starts the same way …

- Certainly I can …

- Certainly! …

- Exactly, you …

- Yes …

Yeah, if I wanted a task to be totally bungled and get told everything actually went fine, I’d ask for an apprentice

I tune out if anyone quotes an llm response to me. At work I have to smile and nod but outside of that I’ll usually tell people I do not accept it as a valid response

step 1 make everyone post on a few corp domains, step 2 flood what remains of the internet with AI slop

also shinigami eyes for ai slop when

maybe if people notice the new versions of chatgpt don’t have any significant improvements the fad will pass

maybe they will flag hexbear because of this, they can not train their AI on shit(posts):

hopefully we are contributing to this outcome through badposting


western internet is dead

Thanks Mr. Fuckface! For ruining everything forevvver

These Tiktok-clone AI silo Videos will not kill the internet (I hope not please)

That play button overlay made him look like a priest to me lol

Honestly that’s how some of the sora 2.0 zealots are treating him