“The real benchmark is: the world growing at 10 percent,” he added. “Suddenly productivity goes up and the economy is growing at a faster rate. When that happens, we’ll be fine as an industry.”

Needless to say, we haven’t seen anything like that yet. OpenAI’s top AI agent — the tech that people like OpenAI CEO Sam Altman say is poised to upend the economy — still moves at a snail’s pace and requires constant supervision.

Correction, LLMs being used to automate shit doesn’t generate any value. The underlying AI technology is generating tons of value.

AlphaFold 2 has advanced biochemistry research in protein folding by multiple decades in just a couple of years, taking us from about 150,000 known protein structures to 200 million predicted ones in a year.

Thanks. So the underlying architecture that powers LLMs has application in things besides language generation like protein folding and DNA sequencing.

AlphaFold is not an LLM, so no, not really.

A Large Language Model is basically a translator. All it did was bridge the gap between us speaking normally and a computer understanding what we are saying.

The actual decisions all these “AI” programs make come from machine learning algorithms, and these algorithms have not fundamentally changed since we created them and started tweaking them in the 90s.

AI is basically a marketing term that companies jumped on to generate hype because they made it so the ML programs could talk to you, but they’re not actually intelligent in the same sense people are, at least by the definitions set by computer scientists.

What algorithm are you referring to?

The fundamental ideas may not have changed: using matrix multiplication plus a nonlinear function, deep learning (i.e. backpropagating derivatives), and gradient descent in general. But the actual algorithms sure have.
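To make those three ingredients concrete, here is a rough sketch in plain Python: a tiny two-layer network fitted to XOR. The network size, learning rate, step count, and the names `forward`, `loss_and_grads` and `train` are all picked for illustration and not taken from any particular library or paper.

```python
import math
import random

# Toy dataset (XOR), chosen only for illustration.
XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def forward(x, W1, b1, W2, b2):
    # Hidden layer: matrix-vector product plus a nonlinearity (tanh).
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    # Output layer: dot product plus a sigmoid.
    z = sum(w * hj for w, hj in zip(W2, h)) + b2
    return h, 1.0 / (1.0 + math.exp(-z))

def loss_and_grads(data, W1, b1, W2, b2):
    n = len(data)
    gW1 = [[0.0] * len(row) for row in W1]
    gb1 = [0.0] * len(b1)
    gW2 = [0.0] * len(W2)
    gb2 = 0.0
    loss = 0.0
    for x, t in data:
        h, y = forward(x, W1, b1, W2, b2)
        loss -= (t * math.log(y) + (1.0 - t) * math.log(1.0 - y)) / n
        # Backpropagation: apply the chain rule from the output backwards.
        dz = (y - t) / n                       # sigmoid + cross-entropy
        gb2 += dz
        for j, hj in enumerate(h):
            gW2[j] += dz * hj
            dh = dz * W2[j] * (1.0 - hj * hj)  # derivative through tanh
            gb1[j] += dh
            for i, xi in enumerate(x):
                gW1[j][i] += dh * xi
    return loss, gW1, gb1, gW2, gb2

def train(data, hidden=4, lr=0.3, steps=2000, seed=0):
    rng = random.Random(seed)
    n_in = len(data[0][0])
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(steps):
        loss, gW1, gb1, gW2, gb2 = loss_and_grads(data, W1, b1, W2, b2)
        losses.append(loss)
        # Gradient descent: move every parameter against its gradient.
        for j in range(hidden):
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i]
            b1[j] -= lr * gb1[j]
            W2[j] -= lr * gW2[j]
        b2 -= lr * gb2
    return losses

if __name__ == "__main__":
    history = train(XOR)
    print("first loss:", history[0], "last loss:", history[-1])
```

None of this is new since the 90s, which is sort of the point: what changed is what gets built on top of it.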

For example, the transformer architecture (used by most modern models) based on multi-head self-attention, optimizers like AdamW, and the whole idea of diffusion for image generation are, I would say, quite disruptive.

Another point is that generative AI was always belittled in the research community until around 2015 (a subjective feeling; it would need a meta-study to confirm). The focus was mostly on classification, something not much talked about today in comparison.
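For anyone who hasn't seen it, the core of self-attention is small enough to sketch. The version below is a deliberate simplification: it does scaled dot-product attention split across heads, but leaves out the learned query/key/value and output projection matrices that a real transformer layer has. The function names are made up for this example.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # Scaled dot-product attention: each query mixes the values,
    # weighted by how well it matches each key.
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        outputs.append([sum(w * v[c] for w, v in zip(weights, values))
                        for c in range(len(values[0]))])
    return outputs, all_weights

def multi_head_self_attention(tokens, num_heads):
    # Simplification: each head just sees a slice of the embedding.
    # Real layers apply learned projections per head instead.
    d_model = len(tokens[0])
    if d_model % num_heads != 0:
        raise ValueError("embedding size must be divisible by num_heads")
    d_head = d_model // num_heads
    per_head = []
    for h in range(num_heads):
        part = [t[h * d_head:(h + 1) * d_head] for t in tokens]
        out, _ = attention(part, part, part)  # self-attention: Q = K = V
        per_head.append(out)
    # Concatenate the heads back into one vector per token.
    return [sum((per_head[h][i] for h in range(num_heads)), [])
            for i in range(len(tokens))]

if __name__ == "__main__":
    toy_tokens = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
    for row in multi_head_self_attention(toy_tokens, num_heads=2):
        print(row)
```

Every token's output is a weighted average over all tokens, so the whole sequence is processed at once rather than step by step.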

Wow, I didn’t expect this to upset people.

When I say it hasn’t fundamentally changed from an AI perspective, I mean there is no intelligence in artificial intelligence.

There is no true understanding of self, just what we expect to hear. There is no problem solving, the step by steps the newer bots put out are still just ripped from internet search results. There is no autonomous behavior.

AI does not meet the definitions of AI, and no amount of long winded explanations of fundamentally the same approach will change that, and neither will spam downvotes.

Btw I didn’t down vote you.

Your reply begs the question which definition of AI you are using.

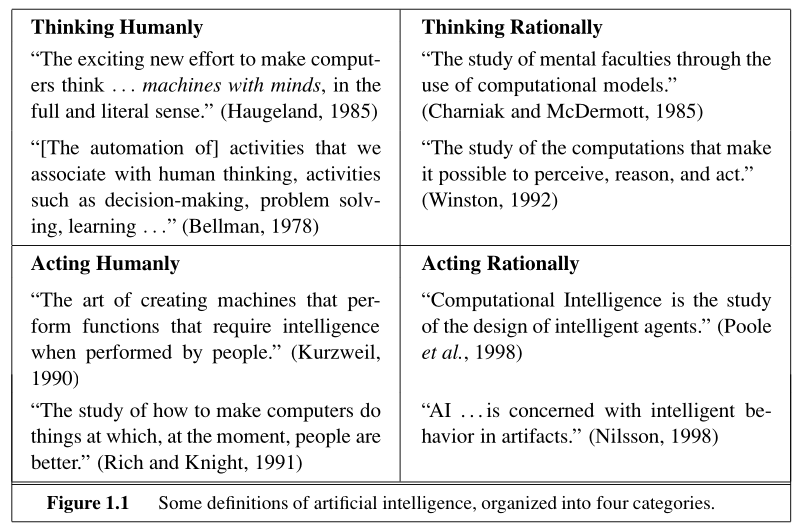

The above is from Russell and Norvig’s “Artificial Intelligence: A Modern Approach”, 3rd edition.

I would argue that of these 8 definitions, 6 apply to modern deep learning stuff. Only the category titled “Thinking Humanly” would agree with you, but I personally think those definitions are self-defeating, i.e. they define AI in a way that is so dependent on humans that a machine could never have AI, which would make the word meaningless.

You are correct that AlphaFold is not an LLM, but both are possible because of the same breakthrough in deep learning, the transformer, and so they share similar architectural components.

And all that would not have been possible without linear algebra and calculus, and so on and so forth… Come on, the work on transformers is clearly separable from deep learning.

That’s like saying the work on rockets is clearly separable from thermodynamics.

I’ve been working on an internal project for my job - a quarterly report on the most bleeding edge use cases of AI, and the stuff achieved is genuinely really impressive.

So why is the AI at the top end amazing yet everything we use is a piece of literal shit?

The answer is the chatbot. If you have the technical nous to program machine learning tools, they can accomplish truly stunning processes at speeds not seen before.

If you don’t know how to do, say, a Fourier transform, you lack the skills to use the tools effectively. That’s no one’s fault, not everyone needs that knowledge, but it does explain the gap between promise and delivery. It can only help you do what you already know how to do faster.

Same for coding: if you understand what your code does, it’s a helpful tool for unsticking part of a problem, but it can’t write the whole thing from scratch.

LLMs could be useful for translation between programming languages. I recently asked one for server code, given client code in a different language, and the LLM-generated code was spot on!

I remain skeptical of using solely LLMs for this, but it might be relevant: DARPA is looking into their usage for C to Rust translation. See the TRACTOR program.

It is fun to generate some stupid images a few times, but you can’t trust that “AI” crap with anything serious.

I was just talking about this with someone the other day. While it’s truly remarkable what AI can do, its margin for error is just too big for most if not all of the use cases companies want to use it for.

For example, I use the Hoarder app which is a site bookmarking program, and when I save any given site, it feeds the text into a local Ollama model which summarizes it, conjures up some tags, and applies the tags to it. This is useful for me, and if it generates a few extra tags that aren’t useful, it doesn’t really disrupt my workflow at all. So this is a net benefit for me, but this use case will not be earning these corps any amount of profit.

On the other end, you have Google’s Gemini that now gives you an AI generated answer to your queries. The point of this is to aggregate data from several sources within the search results and return it to you, saving you the time of having to look through several search results yourself. And like 90% of the time it actually does a great job. The problem with this is the goal, which is to save you from having to check individual sources, and its reliability rate. If I google 100 things and Gemini answers 99 of them accurately but completely hallucinates the 100th, then all 100 times I have to check its sources and verify that what it said was correct. Which means I’m now back to just… you know… looking through the search results one by one like I would have anyway without the AI.

So while AI is far from useless, it can’t be relied on for anything important now and never will be, and that’s where the money to be made is.
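The 99-out-of-100 example above can be put in rough numbers. This is only a back-of-the-envelope sketch that assumes each answer is right or wrong independently of the others, which real queries won't strictly satisfy; the function name is made up for the example.

```python
def chance_of_at_least_one_error(accuracy, num_queries):
    # Assumption: every answer is correct with probability `accuracy`,
    # independently of all the other answers.
    return 1.0 - accuracy ** num_queries

if __name__ == "__main__":
    # 99% per-answer accuracy over 100 searches.
    print(round(chance_of_at_least_one_error(0.99, 100), 3))
```

Under that assumption, a 99% accurate assistant still slips in at least one wrong answer in roughly 63% of 100-query runs, and since nothing tells you which answer it is, all 100 still need checking.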

Even your manual search results may have you find incorrect sources, selection bias for what you want to see, heck even AI generated slop, so the AI generated results will just be another layer on top. Link aggregating search engines are slowly becoming useless at this rate.

While that’s true, the thing that stuck out to me is not even that the AI was misled by finding AI slop itself, or by somebody falsely asserting something. I googled something with a clear yes or no answer: “Does X technology use Y protocol”. The AI came back with “Yes it does, and here’s how it uses it”, and upon visiting the reference page for that answer, it was documentation for that technology where it explained very clearly that X technology does NOT use Y protocol, and then went into detail on why it doesn’t. So even when everything lines up and the answer is clear and unambiguous, the AI can give you an entirely fabricated answer.