Style cannot be copyrighted.

And if copyright laws were somehow changed so that it could be copyrighted, it would be a creative apocalypse.

Not style. But they had to train that AI on Ghibli stuff. So… Did they have the right to do that?

It depends on where they did it, but probably yes. They had the right to do it in Japan, for example.

Training doesn’t involve copying anything, so I don’t see why they wouldn’t. You need to copy something to violate copyright.

There is an argument that training actually is a type of (lossy) compression. You can actually build (bad) language models by using standard compression algorithms to “train”.
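To make the compression point concrete, here is a minimal sketch of a (deliberately bad) text predictor built on `zlib`. The function names and the tiny corpus are made up for illustration; the idea is just that the next character is whichever one makes corpus + context compress smallest, so "training" amounts to the compressor having already seen the corpus.

```python
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the zlib-compressed input at maximum compression."""
    return len(zlib.compress(data, 9))


def predict_next(corpus: str, context: str, alphabet: str) -> str:
    """Pick the character whose addition grows the compressed size least.

    Sizes are whole bytes, so ties are common; min() then returns the
    first tied character in `alphabet`. That is part of why this is a bad model.
    """
    base = (corpus + context).encode("utf-8")
    return min(alphabet, key=lambda ch: compressed_size(base + ch.encode("utf-8")))


def generate(corpus: str, prompt: str, length: int) -> str:
    """Extend `prompt` by `length` characters drawn from the corpus alphabet."""
    alphabet = "".join(sorted(set(corpus)))  # assumes a non-empty corpus
    out = prompt
    for _ in range(length):
        out += predict_next(corpus, out, alphabet)
    return out


# Hypothetical toy corpus, only here to show the call.
corpus = "the cat sat on the mat. the cat sat on the mat. "
print(generate(corpus, "the c", 10))
```

Don't expect good text out of this. It only shows that a general-purpose compressor can be pressed into service as a predictor, which is the link between compression and training the comment is pointing at.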

By that argument, any model contains lossy and unstructured copies of all data it was trained on. If you download a 480p low quality h264-encoded Bluray rip of a Ghibli movie, it’s not legal, despite the fact that you aren’t downloading the same bits that were on the Bluray.

Besides, even if we consider the model itself to be fine, they did not buy all the media they trained the model on. The action of downloading media, regardless of purpose, is piracy. At least, that has been the interpretation for normal people sailing the seas; large companies are of course exempt from filthy things like laws.

Stable Diffusion was trained on the LAION-5B image dataset, which as the name implies has around 5 billion images in it. The resulting model was around 3 gigabytes. If this is indeed a “compression” algorithm then it’s the most magical and physics-defying ever, as it manages to compress images to less than one byte each.
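For anyone who wants to check the arithmetic, here is a quick sketch using only the rounded figures quoted above (about 5 billion images, about 3 gigabytes). Decimal gigabytes are an assumption; the exact counts will differ a little.

```python
# Figures as quoted in the comment above (rounded, not exact counts).
DATASET_IMAGES = 5_000_000_000    # "around 5 billion images"
MODEL_SIZE_BYTES = 3 * 10**9      # "around 3 gigabytes", decimal GB assumed

bytes_per_image = MODEL_SIZE_BYTES / DATASET_IMAGES
bits_per_image = bytes_per_image * 8

print(f"{bytes_per_image:.2f} bytes per image")  # 0.60 bytes per image
print(f"{bits_per_image:.1f} bits per image")    # 4.8 bits per image
```

Note that this is only an average. It says nothing about whether some images are retained in far more detail than others.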

Besides, even if we consider the model itself to be fine, they did not buy all the media they trained the model on.

That is a completely separate issue. You can sue them for copyright violation regarding the actual acts of copyright violation. If an artist steals a bunch of art books to study, then sue him for stealing the art books, but you can’t extend that to say that anything he drew based on that learning is also a copyright violation or that the knowledge inside his head is a copyright violation.

You assume a uniform distribution. I’m guessing that it’s not. The question isn’t “Does the model contain compressed representations of all works it was trained on”. Retaining enough information about any single image is enough to be a copyright issue.

Besides, the situation isn’t as obviously flawed with image models, when compared to LLMs. LLMs are just broken in this regard, because it only takes a handful of bytes being retained in order to violate copyright.

I think there will be a “find out” stage fairly soon. Currently, the US projects lots and lots of soft power on the rest of the world to enforce copyright terms favourable to Disney and friends. Accepting copyright violations for AI will erode that power internationally over time.

Personally, I do think we need to rework copyright anyway, so I’m not complaining that much. Change the law, go ahead and make the high seas legal. But set against current copyright laws, most large datasets and most models constitute copyright violations. Just imagine the shitshow if OpenAI were a European company training on material from Disney.

There’s a difference between lossy and lossless. You can compress anything down to a single bit if you so wish, just don’t expect to get everything back. That’s how lossy compression works.

It’s perfectly legal to compress something to a single bit and publish it.

Hell, if I take and publish the average color of any copyrighted image, that is at least 24 bits. That’s lossy compression, yet legal.
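As a rough illustration of the average-color example, here is a small sketch in plain Python. The pixel list is made up, and a real image would first need decoding with an imaging library, which is left out here.

```python
def average_color(pixels):
    """Return the mean (R, G, B) of a sequence of 8-bit RGB tuples."""
    pixels = list(pixels)
    if not pixels:
        raise ValueError("need at least one pixel")
    n = len(pixels)
    # Integer division keeps each channel inside 0..255.
    return tuple(sum(p[channel] for p in pixels) // n for channel in range(3))


def pack_rgb24(color):
    """Pack an (R, G, B) tuple into a single 24-bit integer."""
    r, g, b = color
    return (r << 16) | (g << 8) | b


# Hypothetical four-pixel "image": red, blue, white, black.
image = [(255, 0, 0), (0, 0, 255), (255, 255, 255), (0, 0, 0)]
avg = average_color(image)
packed = pack_rgb24(avg)
print(avg, f"{packed:06x}")  # (127, 63, 127) 7f3f7f
```

However large the input is, the output always fits in 24 bits, and nothing about the original image can be recovered from it.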

I hate lawyer speak with a passion

Everyone knows what we’re talking about here, what we mean, and so do you

And yet if one wishes to ask:

Did they have the right to do that?

That is inherently the realm of lawyer speak because you’re asking what the law says about something.

The alternative is vigilantism and “mob justice.” That’s not a good thing.

“In its suit, the Times alleges that, when prompted by users, ChatGPT sometimes spits out portions of its articles verbatim, or shares key parts of its content, such as findings uncovered through investigations by Times reporters, or product endorsements carefully researched and vetted by Wirecutter, an affiliate site.”

From: https://hls.harvard.edu/today/does-chatgpt-violate-new-york-times-copyrights/

In its suit, the Times alleges that

Emphasis added. Of course they’re going to claim their copyright was violated, they don’t have a case otherwise.

It remains to be seen how the case will be decided.

Lol did you even read the article you linked? OpenAI isn’t disputing the fact that their LLM spit out near-verbatim NY Times articles/passages. They’re only taking issue with how many times the LLM had to be prompted to get it to divulge that copyrighted material and whether there were any TOS violations in the process.

They’re saying that the NYT basically forced ChatGPT to spit out the “infringing” text. Like manually typing it into Microsoft Word and then going “gasp! Microsoft Word has violated our copyright!”

The key point here is that you can’t simply take the statements of one side in a lawsuit as being “the truth.” Obviously the lawyers for each side are going to claim that their side is right and the other side are a bunch of awful jerks. That’s their job, that’s how the American legal system works. You don’t get an actual usable result until the judge makes his ruling and the appeals are exhausted.

If a fact isn’t disputed by either side in a case as contentious as this one, it’s much more likely to be true than not. You can certainly wait for the gears of “justice” to turn if you like, but I think it’s pretty clear to everyone else that LLMs are plagiarism engines.

Music would be gone forever lol

This is already a copyright apocalypse though isn’t it? If there is nothing wrong with this then where is the line? Is it okay for Disney to make a movie using an AI trained on some poor sap on Deviant Art’s work? This feels like copyright laundering. I fail to see how we aren’t just handing the keys of human creativity to only those with the ability to afford a server farm and teams of lawyers.

I would agree the cat’s out of the bag, so there may not be anything that can be done. The keys aren’t going to those who can afford a server farm; the door is wide open for anyone with a computer.

The interesting follow up to this is what Disney does to a model trained on their films. Sure lawyers, but how much will they actually be able to do?

With these sorts of things, it’s almost always the smaller guy that doesn’t reach as far that gets hurt, and not the big company.

That and AI companies not giving a fuck about copyright. I don’t understand those articles.

I think you’re right about style. As a software developer myself, I keep thinking back to early commercial / business software terms that listed all of the exhaustive ways you could not add their work to any “information retrieval system.” And I think, ultimately, computers cannot process style. They can process something, and style feels like the closest thing our brains can come up with.

This feels trite at first, but computers process data. They don’t have a sense of style. They don’t have independent thought, even if you call it a “<think> tag”. Any work product created by a computer from copyrighted information is a derivative work, in the same way a machine-translated version of a popular fiction book is.

This act of mass corporate disobedience, putting distillate made from our collective human works behind a paywall, needs to be punished.

. . .

But it won’t be. That bugs me to no end.

(I feel like my tone became a bit odd, so if it felt like I was yelling at the poster I replied to, I apologize. The topic bugs me, but what you said is true and you’re also correct.)

I think it will be punished, but not how we hope. The laws will end up rewarding the big data holders (Getty, record labels, publishers) while locking out open source tools. The paywalls will stay and grow. It’ll just formalize a monopoly.

I think this might be hypocritical of me, but in one sense I think I prefer that outcome. Let those existing trained models become the most vile and untouchable of copyright infringing works. Send those ill-gotten corporate gains back to the rights holders.

What, me? Of course I’ve erased all my copies of those evil, evil models. There’s no way I’m keeping my own copies to run, illicitly, on my own hardware.

(This probably has terrible consequences I haven’t thought far enough ahead on.)

I understand the sentiment but I think it’s foolhardy.

- The job losses still occur

- The handful of companies able to pay for the data have a de facto monopoly (Google, OpenAI)

- That monopoly is used to keep the price tag of state of the art AI tools above consumer levels (your boss can afford to replace you but you can’t afford to compete against him with the same tools).

And all that mostly benefits the data holders and big AI companies. Most image data is on platforms like Getty, Deviant Art, Instagram, etc. It’s even worse for music and lit, where three record labels and five publishers own most of it.

If we don’t get a proper music model before the lawsuits pass, we will never be able to generate music without being told what is or isn’t okay to write about.

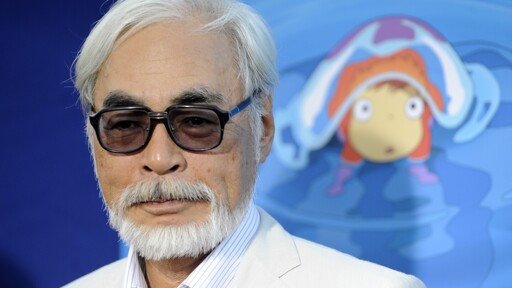

I’m not sure pissing off Miyazaki is a great move. He’s an old Japanese man who is famously so bitter that when he chain smokes he gives the cigarettes cancer, communicates largely in contemplative one-liners, and is known to own precisely one sword. And he has a beard. We’ve all seen this movie; we know how that kind of thing ends.

Had a beard. He clean shaved a couple weeks ago.

He did what?

Nature really is out of balance lately.

Uh-oh. If I was Altman, I would start running right now.

C’mon Ghibli, be as litigious as Nintendo and sue them into the ground

It’s so good at it. To the point where I assume they must have fed the model the bulk of whole movies.

High chance of the movies being pirated too

I mean, this is what AI art is made for: shitposts. But yeah… how would AI know about Ghibli style without “ripping” off images from the internet?

Another article on the same topic, but with the images it’s talking about:

I don’t follow AI development too much, so when I first saw these images I didn’t know they were AI.

But to me something about these felt soulless, compared to snaps of Ghibli movies.

Now knowing it’s AI explained it some more.

I bet the reason you feel that way is because you know it’s not Ghibli who made it. The other part is that it’s a meme. Though seeing this art style everywhere makes it lose its charm and novelty. Instead of thinking about all those movies they made, you get to think about a “2 girls 1 cup” Ghibli movie poster. That’s bad for business, especially for Ghibli. It’s the same thing that happened with the Pixar art style.

I don’t see how this changes anything. Style isn’t copyrightable, so if anything it seems the least concern.

Characters or specific scenes, those are the really juicy bits

Edit: And of course still the general question of ingesting copyrighted inputs without license for other than private use

Is it possible to try this Ghiblification locally?

It shouldn’t be much of a problem using a Ghibli-based model with img2img. I personally use Forge as my main UI; models can be found on civitai.com. It’s easily possible; you just need a bit of VRAM, and setting it up takes more work. You might get more mileage by using ControlNet in conjunction with img2img.
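If you would rather script it than click through the UI, here is a rough sketch. It assumes your Forge install was started with its API enabled and exposes the AUTOMATIC1111-style `/sdapi/v1/img2img` endpoint on the default local port; check your own setup before relying on that. The helper names, prompt, and default values are made up for illustration.

```python
import base64
import json
import urllib.request


def build_img2img_payload(image_bytes, prompt, denoising_strength=0.5, steps=25):
    """Build the JSON body for an img2img request.

    A lower denoising_strength keeps more of the original picture.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "prompt": prompt,
        "denoising_strength": denoising_strength,
        "steps": steps,
    }


def send_img2img(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload to a locally running UI and return the first image as bytes.

    Assumption: the server is running locally with its API enabled.
    """
    request = urllib.request.Request(
        base_url + "/sdapi/v1/img2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return base64.b64decode(body["images"][0])


# Usage (needs the local server running, so it is left commented out):
# with open("photo.png", "rb") as f:
#     payload = build_img2img_payload(f.read(), "ghibli style, soft colors")
# with open("out.png", "wb") as f:
#     f.write(send_img2img(payload))
```

The model itself is whatever checkpoint is loaded in the UI at the time, so pick the Ghibli-style one there first. ControlNet is not covered by this sketch.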