- cross-posted to:

- technology@lemmy.world

“With fewer visits to Wikipedia, fewer volunteers may grow and enrich the content, and fewer individual donors may support this work.”

Archived version: https://archive.is/20251017020527/https://www.404media.co/wikipedia-says-ai-is-causing-a-dangerous-decline-in-human-visitors/

Personally, I have been moving the opposite way. There are so many bullshit websites that wading through them is a pain. Instead, I jump directly to Wikipedia.

Same! I have Wikipedia pinned as the first search result on Kagi if there's an entry.

I was thinking of sharing a Kagi Duo plan with my dad. Is it worth it? I currently use Ecosia.

I have been using it for about a year. There are zero ads anywhere. No sponsored posts. Just pure search. They also give you some quite good tools to derank or delist websites, so you can define your own standard of search-result quality, and it's very effective. They even go the extra mile of deranking websites with many trackers, since that apparently correlates with SEO, which normally doesn't track with result quality. It's a very refreshing take on the web. I love it :)

Edit: they also have an extension that lets you search while logged out, using tokens, which improves privacy, in case you want to go the extra mile.

I think so! I'm pretty sure they still give you 100 free searches to try it out, so it might be worth test-driving before you pay for it. From what I understand, it's mostly Google on the back end with Kagi's own algorithm on top. My favorite part is being able to control how the results are ranked, based on which sites you want to see more or less of, plus some other pretty powerful filters. I definitely think it's worth a shot if you're curious.

I have moved the opposite way. I am noticing so much propaganda on Wikipedia that I would rather avoid it.

For example, this page, which is clearly written by Zionist bots, but somehow has edit protection.

More proof that AI ruins everything, if any were needed.

Well, you won’t ever be losing me, WP.

We got WordPress’s number one fan here.

I’ll be honest with you: I have absolutely no idea how to interpret that in this context.

Vanilla guess: WP = Winnie the Pooh.

Ah. I see.

There’s also Windows Phone

WP interpreted as “Winnie the Pooh” instead of “Wikipedia”?

Me neither.

AI creating more poor thinkers is how I read this. It's like that Outer Limits episode where everyone is mentally hooked up to all the answers and one guy can't have the implant. In the end, he is the only one who can find his own answers.

I've had this thought about heaven before. I assume you don't go in with whatever limitations your brain has in its earthly form, which means the people who died longest ago would have an almost unapproachable level of knowledge, and it seems like everyone would eventually reach that level over eternity. Then what? It would be a space filled with people who know everything. Would you even be you anymore? Wouldn't it all be boring?

(I don’t believe in heaven)

Like almost every religious concept, heaven falls apart almost immediately under even the slightest scrutiny.

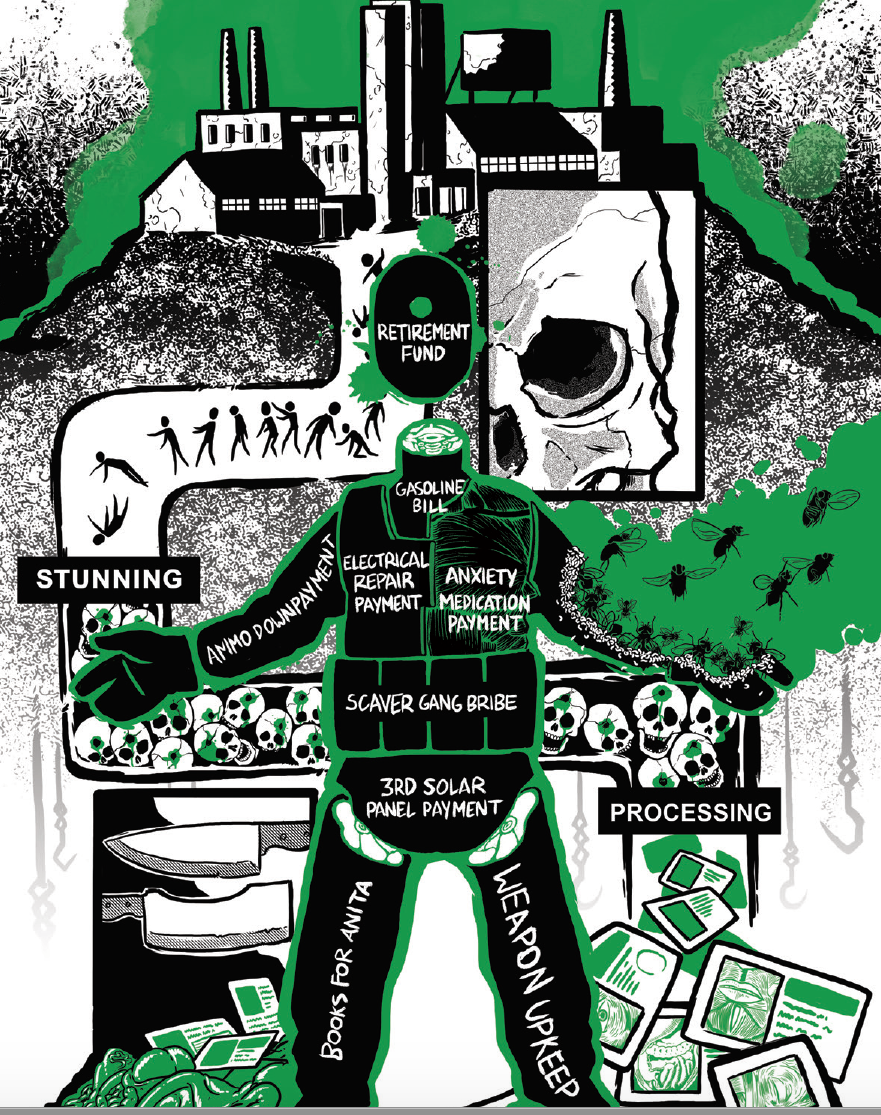

I cancelled my donations to Wikipedia when the WMF announced they'd be using AI, and they gave me a really milquetoast bullshit response when I emailed them. Can't trust such a precious, collaborative, human effort as Wikipedia to a bunch of anti-human robofuckers. 🤷♂️

Correct me if I'm wrong, but haven't they since backed off AI integration after backlash from their contributors, and are no longer planning any?

Honestly, I don’t know. I stopped listening after they said they were going to.

Too little too late for me really; unless they replace the people who made that decision in the first place, that sort of anti-human, lazy, irresponsible sentiment will remain present in their leadership.

Why would you want to replace people who are capable of changing their minds when given input from others?

I would say we need more of that kind of leadership.

If I'm being completely honest, I don't know why backing down after massive backlash is being lauded. I want to replace them because the original decision to pollute Wikipedia with AI at all was so poor that I no longer trust them to make good choices.

I was following along when they initially announced it. While I personally think it's an awful idea, it did seem to stem not from a profit motive but from an intention to make Wikipedia easier to use and more effective. And when the vast majority of contributors and editors told Wikipedia it was an atrocious idea and that they shouldn't do it, they listened and scrapped the plan.

My point being, isn’t it better as a whole that they’re willing to consider new things but will also listen to the feedback they get from their users and maintainers and choose not to implement ideas that are wildly unpopular?

Writing off all of Wikipedia (the most effective tool for collaborative knowledge collection in human history) just because they announced a well-intentioned tool addition, then scrapped the plan when they realized it was unpopular and would likely degrade their platform, seems shortsighted at best imo.

I get that I'm being pigheaded, but unfortunately I don't trust that it was done out of purely good intentions and naivety. Even if that was the case, the people making that decision are gullible enough to fall for LLM hype and too irresponsible to be in charge of something that we both agree is an absolute marvel of our modern age: a massive collaborative effort contributed to by hundreds of thousands of people and used by millions more.

I totally understand that I’m being stubborn, but this isn’t a principle I’m willing to compromise on in this case!

That's a problem across the board. Assuming AI does establish itself, all its training data dries up and we basically stagnate.

Also, in this weird in-between phase before it's actually good, we've already generated so much bullshit that AI ends up training on the hallucinations of other AIs.

At some point we will train it on live data, similar to how human babies learn. There's always more data.

And the AIs themselves can generate data. There have been a few recent news stories about AIs doing novel research, that will only become more prevalent over time.

The big catch, though, is that whatever is generated needs to be verified. The most recent story I've seen was about an AI proposing the hypothesis that a particular drug increases antigen presentation, which could turn cold tumors (those the immune system does not attack) into hot tumors (those the immune system does attack). The key news here is that the hypothesis was found to be correct: an experiment showed that the drug does have this effect. (link to Google's press release)

The catch is that I have not seen any info on how many hypotheses were generated to find this correct one. It doesn't have to be perfect: research often leads to a hypothesis being rejected, even one proposed by a person rather than an AI. However, the signal-to-noise ratio still matters for how game-changing it will be. As in this blog post, it can fail to identify a solution at all, or even return incorrect hypotheses. You can't simply use this output to further train the LLM, as it would only degrade performance.

There needs to be verification and filtering first. Wikipedia has played that role for a very long time, with editors citing sources and verifying their trustworthiness. If Wikipedia goes under because of this, whether from a lack of funding or a lack of editors, a very important resource will be lost.

It's like the search summary problem, only worse. Sites were already losing traffic because Google would try to answer the question with a snippet from their pages. Now LLMs (or at least ChatGPT) are trained on Wikipedia because it's available data: Wikipedia was the second-largest source of facts they used, after Reddit (at least according to Statista).

Side note: you're not even supposed to source facts from Reddit or Wikipedia; they're better for finding other sources. It's like a game of telephone: at the tertiary source you're getting even less accurate information. The direction end users are being herded in is just so stupid.

AI scrapers don’t make donations

I use Gemini for my web searches now, but I still donate to the WMF. In most cases I just want an answer with the least amount of bullshit. Even using Startpage to get Google results without Google's bullshit is annoying. My wife demands sources, and Gemini will provide those as well.

My view of the danger is that people are sourcing facts from AI instead of Wikipedia. Not worried about volunteers, they’re not going anywhere.

‘facts’

Both are not a source. Both can lead you to a real source.

They would be getting the same misinformation.

In my experience, Google's AI search results are mostly just ripping text from Wikipedia.

Well, maybe if there weren't a huge banner at the top of every article bugging me for $3.50 like the Loch Ness Monster, people wouldn't need to use LLMs to get the useful info /s

I don’t use AI at all

For me, it depends what year it is

What about non-human visitors?

Just make wikipedia full of AI content. (/s)

Pretty telling that AI will use almost any shitty source besides one that is actually a pretty decent summary of sources.

Before I figured out how to turn it off, DuckDuckGo's (DDG's) AI usually cited Wikipedia if there was an article available.

I cannot read the article, but this seems like a non-issue.

Loads of old Wikipedia pages are essentially complete. Just freeze them.

And doesn't the Wikimedia Foundation already have years' worth of funding? And wouldn't fewer visitors mean less need for servers and bandwidth?

Current events need editors, and those will have controversy. I expect primary news sources would be better for anything less than a week old.

A Wikipedia that freezes at 2024 would still be of great value.

Clearly, I am missing the problem.

Old events are not frozen. There are these people called historians and archaeologists who, believe it or not, are to this very day still researching "old" events and updating the facts as they find new sources or correct old ones.

Gotta drive those donations somehow