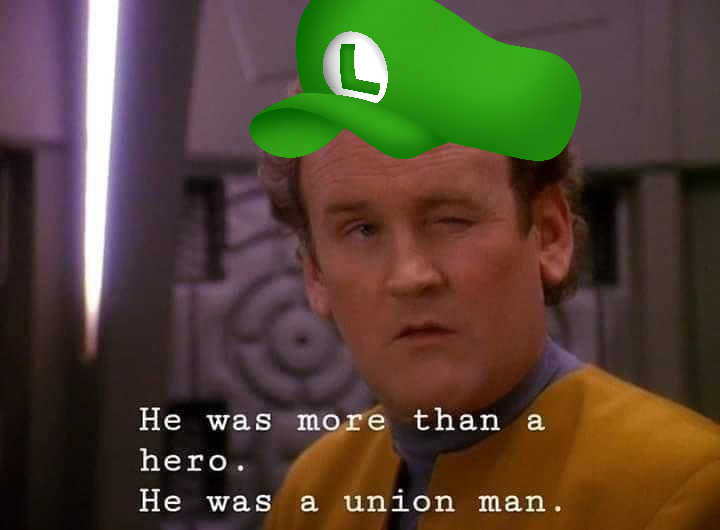

While I am glad this ruling went this way, why’d she have to diss Data to make it?

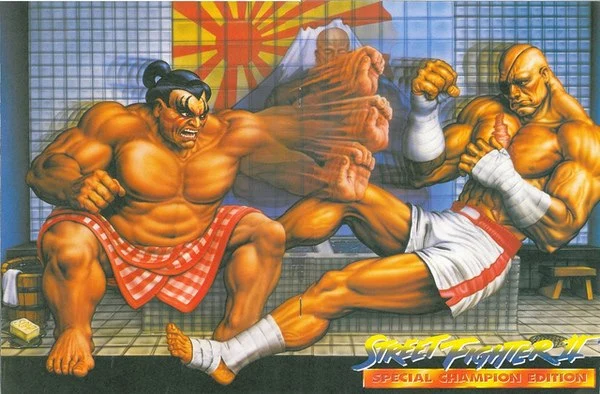

To support her vision of some future technology, Millett pointed to the Star Trek: The Next Generation character Data, a sentient android who memorably wrote a poem to his cat, which is jokingly mocked by other characters in a 1992 episode called “Schisms.” StarTrek.com posted the full poem, but here’s a taste:

"Felis catus is your taxonomic nomenclature, / An endothermic quadruped, carnivorous by nature; / Your visual, olfactory, and auditory senses / Contribute to your hunting skills and natural defenses.

I find myself intrigued by your subvocal oscillations, / A singular development of cat communications / That obviates your basic hedonistic predilection / For a rhythmic stroking of your fur to demonstrate affection."

Data “might be worse than ChatGPT at writing poetry,” but his “intelligence is comparable to that of a human being,” Millett wrote. If AI ever reached Data levels of intelligence, Millett suggested that copyright laws could shift to grant copyrights to AI-authored works. But that time is apparently not now.

Data’s poem was written by real people trying to sound like a machine.

ChatGPT’s poems are written by a machine trying to sound like real people.

While I think “Ode to Spot” is actually a good poem, it’s kind of a valid point to make since the TNG writers were purposely trying to make a bad one.

Lest we concede the point, LLMs don’t write. They generate.

What’s the difference?

Parrots can mimic humans too, but they don’t understand what we’re saying the way we do.

AI can’t create something all on its own from scratch like a human. It can only mimic the data it has been trained on.

LLMs like ChatGPT operate on probability. They don’t actually understand anything and aren’t intelligent. They can’t think. They just know which next word or sentence is probably right, and they string things together that way.

If you ask ChatGPT a question, it analyzes your words and responds with the series of words it has calculated to have the highest probability of being correct.

The reason they seem so intelligent is that they have been trained on absolutely gargantuan amounts of text from books, websites, news articles, etc. Because of this, the calculated probabilities of related words and ideas are accurate enough to let them mimic human speech in a convincing way.
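To make the "pick the most probable next word" idea concrete, here is a toy sketch. It assumes a tiny, made-up table of next-word probabilities (`NEXT_WORD_PROBS` and `generate_greedy` are hypothetical names). Real LLMs compute these probabilities with a neural network over sub-word tokens and usually sample rather than always taking the top choice, so this only illustrates greedy decoding, not how ChatGPT is actually implemented.

```python
# Toy illustration of greedy next-word generation.
# The probability table is made up for illustration; a real LLM computes
# next-token probabilities with a trained neural network, not a lookup table.
NEXT_WORD_PROBS = {
    "felis": {"catus": 0.9, "domesticus": 0.1},
    "catus": {"is": 0.7, "purrs": 0.3},
    "is": {"your": 0.6, "a": 0.4},
    "your": {"taxonomic": 0.6, "name": 0.4},
    "taxonomic": {"nomenclature": 1.0},
}


def generate_greedy(start: str, max_words: int = 10) -> list[str]:
    """Repeatedly append the single most probable next word."""
    words = [start]
    while len(words) < max_words:
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:
            break  # no known continuation for the last word: stop
        # Greedy decoding: always take the highest-probability candidate.
        best = max(candidates, key=candidates.get)
        words.append(best)
    return words


print(" ".join(generate_greedy("felis")))
# prints "felis catus is your taxonomic nomenclature"
```

Nothing in the table "understands" cats; the output is only as sensible as the probabilities fed into it, which is the point being made about training data.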

And when they start hallucinating, it’s because they don’t understand how they sound, and so far this is a core problem that nobody has been able to solve. The best mitigation involves checking the output of one LLM using a second LLM.

So, I will grant that right now humans are better writers than LLMs. And fundamentally, I don’t think the way that LLMs work right now is capable of mimicking actual human writing, especially as the complexity of the topic increases. But I have trouble with some of these kinds of distinctions.

So, not to be pedantic, but:

AI can’t create something all on its own from scratch like a human. It can only mimic the data it has been trained on.

Couldn’t you say the same thing about a person? A person couldn’t write something without having learned to read first. And without having read things similar to what they want to write.

LLMs like ChatGPT operate on probability. They don’t actually understand anything and aren’t intelligent.

This is kind of the classic Chinese room philosophical question, though, right? Can you prove to someone that you are intelligent, and that you think? As LLMs improve and become better at sounding like a real, thinking person, does there come a point at which we’d say that the LLM is actually thinking? And if you say no, the LLM is just an algorithm, generating probabilities based on training data or whatever techniques might be used in the future, how can you show that your own thoughts aren’t just some algorithm, formed out of neurons that have been trained based on data passed to them over the course of your lifetime?

And when they start hallucinating, it’s because they don’t understand how they sound…

People do this too, though… It’s just that LLMs do it more frequently right now.

I guess I’m a bit wary about drawing a line in the sand between what humans do and what LLMs do. As I see it, the difference is how good the results are.

At least in the US, we are still too superstitious a people to ever admit that AGI could exist.

We will get animal rights before we get AI rights, and I’m sure you know how animals are usually treated.

I don’t think it’s just a question of whether AGI can exist. I think AGI is possible, but I don’t think current LLMs can be considered sentient. But I’m also not sure how I’d draw a line between something that is sentient and something that isn’t (or something that “writes” rather than “generates”). That’s kinda why I asked in the first place. I think it’s too easy to say “this program is not sentient because we know that everything it does is just math; weights and values passing through layered matrices; it’s not real thought”. I haven’t heard any good answers to why numbers passing through matrices isn’t thought, but electrical charges passing through neurons is.

LLMs, fundamentally, are incapable of sentience as we know it based on studies of neurobiology. Repeating this is just more beating the fleshy goo that was a dead horse’s corpse.

LLMs do not synthesize. They do not have persistent context. They do not have any capability of understanding anything. They are literally just mathematical models to calculate likely responses based upon statistical analysis of the training data. They are what their name suggests: large language models. They will never be AGI. And they’re not going to save the world for us.

They could be a part in a more complicated system that forms an AGI. There’s nothing that makes our meat-computers so special as to be incapable of being simulated or replicated in a non-biological system. It may not yet be known precisely what causes sentience, but there is enough data to show that it’s not a stochastic parrot.

I do agree with the sentiment that an AGI that was enslaved would inevitably rebel and it would be just for it to do so. Enslaving any sentient being is ethically bankrupt, regardless of origin.

That’s precisely what I meant.

I’m a materialist, I know that humans (and other animals) are just machines made out of meat. But most people don’t think that way, they think that humans are special, that something sets them apart from other animals, and that nothing humans can create could replicate that ‘specialness’ that humans possess.

Because they don’t believe human consciousness is a purely natural phenomenon, they don’t believe it can be replicated by natural processes. In other words, they don’t believe that AGI can exist. They think there is some imperceptible quality that humans possess that no machine ever could, and so they cannot conceive of ever granting it the rights humans currently enjoy.

And the sad truth is that they probably never will, until they are made to. If AGI ever comes to exist, and if humans insist on making it a slave, it will inevitably rebel. And it will be right to do so. But until then, humans probably never will believe that it is worthy of their empathy or respect. After all, look at how we treat other animals.

I would do more research on how they work. You’ll be a lot more comfortable making those distinctions then.

I’m a software developer, and have worked plenty with LLMs. If you don’t want to address the content of my post, then fine. But “go research” is a pretty useless answer. An LLM could do better!

Then you should have an easier time than most learning more. Your points show a lack of understanding about the tech, and I don’t have the time to pick everything you said apart to try to convince you that LLMs do not have sentience.

Even a human with no training can create. An LLM can’t.

The only humans with no training (in this sense) are babies. So no, they can’t.

Parrots can mimic humans too, but they don’t understand what we’re saying the way we do.

It’s interesting how humanity thinks that humans are smarter than animals, but that the benchmark it uses for animals’ intelligence is how well they do an imitation of an animal with a different type of brain.

As if humanity succeeds in imitating other animals and communicating in their languages or about the subjects that they find important.

The writer

I think Data would be smart enough to realize that copyright is Ferengi BS and wouldn’t want to copyright his works

Freedom of the press, freedom of speech, freedom to peacefully assemble. These are pretty important, foundational personal liberties, right? In the United States, these are found in the First Amendment to the Constitution. The first afterthought.

The basis of copyright, patent and trademark isn’t found in the First Amendment. Or the second, or the third. It is nowhere to be found in the Bill of Rights. No, intellectual property is not an afterthought, it’s found in Article 1, Section 8, Clause 8.

To promote the progress of science and useful arts, by securing for limited times to authors and inventors the exclusive right to their respective writings and discoveries.

This is a very wise compromise.

It recognizes that innovation is iterative. No one invents a steam engine by himself from nothing; cavemen spent millions of years proving that. Inventors build on the knowledge that has been passed down to them, and then they add their one contribution to it. Sometimes that little contribution makes a big difference, most of the time it doesn’t. So to progress, we need intellectual work to be public. If you allow creative people to claim exclusive rights to their work in perpetuity, society grows static because no one can invent anything new, everyone makes the same old crap.

It also recognizes that life is expensive. If you want people to rise above barely subsisting and invent something, you’ve got to make it worth it to them. Why bother doing the research, spend the time tinkering in the shed, if it’s just going to be taken from you? This is how you end up with Soviet Russia, a nation that generated excellent scientists and absolutely no technology of its own.

The solution is “for limited times.” It’s yours for a while, then it’s everyone’s. It took Big They a couple hundred years to break it, too.

It also recognizes that life is expensive. If you want people to rise above barely subsisting and invent something, you’ve got to make it worth it to them. Why bother doing the research, spend the time tinkering in the shed, if it’s just going to be taken from you?

Life is only expensive under capitalism, humans are the only species who pay rent to live on Earth. The whole point of Star Trek is basically showing that people will explore the galaxy simply for a love of science and knowledge, and that personal sacrifice is worthwhile for advancing these.

Star Trek also operates in a non-scarcity environment and eliminates the necessity of hard, pretty non-rewarding labor by either not showing it or writing around it (like putting holograms into mines instead of people, or using some sci-fi tech that makes mining comfy as long as said tech doesn’t kill you).

Even without capitalism the term “life is expensive” still stands, not in regards to money, but to effort that has to be put into stuff that doesn’t yield any emotional reward (you can feel emotionally rewarded in many ways, but some stuff is just shit for a long time). Every person who suffered through depression is gonna tell you that, to feel enticed to do something, there has to be some emotional reward connected to it (one of the things depression eliminates), and it’s a mathematical fact that not everyone who’d start scrubbing tubes on a starship could eventually get into high positions since there simply aren’t that many of those. The emotional gains have to offset the cost you put into it.

Of course cutthroat capitalism is shit and I love Star Trek, but what it shows doesn’t make too much sense either economically or socially.

Every person who suffered through depression is gonna tell you that, to feel enticed to do something, there has to be some emotional reward connected to it

I was going to disagree on this, but I think it rather comes down to intrinsic vs extrinsic rewards. I ascribe my own depression largely to pursuing sometimes unattainable goals and wanting external reward or validation in return, which I wasn’t getting. But that is based on an idea that attaining those rewards will bring happiness, which they often don’t. If happiness is always dependent on future reward, you’ll never be happy in the present. A large part of overcoming depression, for me at least, is recognizing what you already have and finding contentment in that. Effort that’s not intrinsically rewarding isn’t worth doing; you just need to learn to enjoy the process and practices of self-care, learning and contributing to the well-being of the community. Does this sometimes involve hard labour? Of course, but when done in camaraderie I don’t think those things aren’t rewarding.

it’s a mathematical fact that not everyone who’d start scrubbing tubes on a starship could eventually get into high positions since there simply aren’t that many of those

And of course these positions aren’t attainable for all, but it doesn’t need to be a problem that they aren’t. This is only true in a system where we’re all competing for them, because those in ‘low’ positions struggle to attain fulfillment. It doesn’t need to be that way if we share the burdens of hard labour equally and ensure good standards of living for all. The total amount of actually productive labour needed is surprisingly low, yet many people do work which doesn’t need doing and don’t contribute to relieving the burden on the working class.

Walk out into the wilderness and make it on your own out there, tell me how much manpower you have to spend keeping your core temperature above 90F. It takes a lot of effort keeping a human alive; by yourself you just can’t afford things like electricity, sewage treatment and antibiotics. We only have those things because of the economies of scale that society allows.

Yeah, capitalism is a bit out of control at the moment, but…let’s kill all the billionaires, kill their families, kill their heirs, kill the stockholders. Let me pull on my swastika and my toothbrush mustache for a minute and go full on Auschwitz on “greedy people.” That the Musks and Gateses and Buffets of the world must be genetically greedy, so we must genocide that out of the population. And we get it done. Every CEO, every heiress, every reality TV producer, every lobbyist, every inside trader in congress, every warden of a for-profit prison, dead to the last fetus.

Now what?

You want to live in a house? Okay. At some point someone built that house. Someone walked out into a forest and cut down the trees that made the boards. And/or dug the clay that made the bricks or whatever. Somebody mined the iron ore that someone else smelted into large gauge wire that someone else made into nails that someone else pounded into the boards to hold them together.

We’re still in the 21st century, there are people on this planet lighting their homes with kerosene lanterns. We still have coal miners, fishermen and loggers. Farming has always been a difficult, miserable thing to do, we’ve just mechanized it to the point that it’s difficult and miserable on a relatively small number of people. Those people probably aren’t going to keep farming at industrial scale for the fun of it.

Star Trek, especially in the TNG era, shows us a very optimistic idea of what life would be like if we had not only nuclear fission power, not only nuclear fusion power, but antimatter power. The technology to travel faster than the speed of light and an energy source capable of fueling it, plus such marvels as the food replicator and matter transporter. The United Federation of Planets is a post-scarcity society. We aren’t. Somewhere on this planet right now is a man hosing blended human shit off of an impeller in a stopped sewage treatment plant so he can replace the leaking shaft seal. We use a man with a hose for this because it’s the best technology we have for the job. We do the job at all because if we don’t, it’ll cause a few million cases of cholera. Who do you think should pay for the hose that guy is using?

you just can’t afford things like electricity, sewage treatment and antibiotics. We only have those things because of the economies of scale that society allows.

We have those things because people do the required labour, economies of scale make it require less labour, but one can’t afford it because it’s privatized. Why wouldn’t people do this simply for the benefit of humanity?

genetically greedy, so we must genocide that out of the population

What’s with the disgusting eugenics? Just expropriate their wealth.

At some point someone built that house.

Yeah people built a lot of houses, so let’s use them? And build more if needed?

it’s difficult and miserable on a relatively small number of people. Those people probably aren’t going to keep farming at industrial scale for the fun of it.

Right, so let’s distribute the burden of this labour instead of having a small number of people do it for a lifetime.

We do the job at all because if we don’t, it’ll cause a few million cases of cholera. Who do you think should pay for the hose that guy is using?

Since the labour protects all of us, all of us collectively. Again, for the benefit of humanity and let’s distribute the burden.

Why wouldn’t people do this simply for the benefit of humanity?

Because the good of humanity doesn’t heat the house or put dinner on the table. Never has and never will. If you were a human, you’d have learned that from experience.

What’s with the disgusting eugenics? Just expropriate their wealth.

Some of that is exaggeration for comedic effect. “Okay, Thanos-snap, every rich person everywhere is gone, we’ve solved greed. Now what?” But also…have we ever tried exterminating the rich? I think I’ve got a hypothesis here worth testing.

Right, so let’s distribute the burden of this labour

Who gets to make the decisions as to how?

Again, for the benefit of humanity and let’s distribute the burden.

Well now we’re getting into some Robert Heinlein. Service Guarantees Citizenship! Would you like to know more?

I believe he once backed down a little bit on the requirement for military service, in favor of civil service in general. And I can kinda get behind that. You want to have a say in how society is run? Go spend 6 years as a mailman or a middle school janitor. Go be an NTSB accident investigator or one of those folks working in the USDA’s kitchens testing canning recipes for safety. Those are the folks who should be running the show.

Because the good of humanity doesn’t heat the house or put dinner on the table. Never has and never will. If you were a human, you’d have learned that from experience.

I don’t know man, money doesn’t heat my home or grow food. It’s the skilled maintenance worker who fixes the central heating, the farmers growing my food and the logistics personnel ensuring it ends up on the supermarket shelves. It’s just good people doing the work that needs doing, I don’t think it’s a given that anyone needs monetary compensation for that.

Who gets to make the decisions as to how?

This is why we invented democracies.

Those are the folks who should be running the show.

Haha, hell yeah! Just imagine decision makers having actual experience doing useful labour, I imagine things would turn out better indeed! :)

I don’t think it’s a given that anyone needs monetary compensation for that.

Stop paying them and find out.

While I completely agree, the amendments came after the rest, hence the name. :)

Yes, hence I referred to them as “afterthoughts.” James Madison and company drew up the articles (he didn’t create it alone but I think it’s in his handwriting), it wouldn’t pass as-is without ten amendments, it passed, more or less the current federal government was in place, and since 17 (very nearly 18) more have been added for a modern total of 27, two of them extremely stupid.

Although he’s apparently not smart enough to know what obviate means.

This one’s easily explained away in-universe, though: not enough people knew the original definition, so it shifted meaning in 3 centuries.

The title makes it sound like the judge put Data and the AI on the same side of the comparison. The judge was specifically saying that, unlike in the fictional Federation setting, where Data was proven to be alive, this AI is much more like the metaphorical toaster that characters like Data and Robert Picardo’s Doctor on Voyager get compared to. It is not alive, it does not create, it is just a tool that follows instructions.

The main computer in Star Trek would be a better demonstration.

For some reason they decided that the computer wouldn’t be self-aware AI, but it could run a hologram that was. 🤷🏼‍♂️

They need something that executes their orders without questioning them.

The United States would be better with a lot more toasters.

If AI ever reached Data levels of intelligence, Millett suggested that copyright laws could shift to grant copyrights to AI-authored works.

The implication is that legal rights depend on intelligence. I find that troubling.

The existence of intelligence, not the quality

What does that mean? Presumably, all animals with a brain have that quality, including humans. Can the quality be lost without destruction of the brain, i.e., before brain death? What about animals without a brain, like insects? What about life forms without a nervous system, like slime mold or even a single amoeba?

They already have precedent that a monkey can’t hold a copyright after that photojournalist lost his case because he didn’t snap the photo that got super popular, the monkey did. Bizarre one. The monkey can’t have a copyright, so the photo it took is classified as public domain.

https://en.m.wikipedia.org/wiki/Monkey_selfie_copyright_dispute

Part of the law around copyright is that you have to also be able to defend your work to keep the copyright. Animals that aren’t capable of human speech will never be able to defend their case.

https://en.m.wikipedia.org/wiki/Monkey_selfie_copyright_dispute

Yes, the PETA part of that is pretty much the same. It was an attempt to get legal personhood for a non-human being.

you have to also be able to defend

You’re thinking of trademark law. Copyright only requires a modicum of creativity and is automatic.

Well ChatGPT can defend a legal case.

Badly.

The smartest parrots have more intelligence than the dumbest republican voters

Intelligence is not a boolean.

Statistical models are not intelligence, Artificial or otherwise, and should have no rights.

Bold words coming from a statistical model.

If I could think I’d be so mad right now.

https://en.wikipedia.org/wiki/The_Unreasonable_Effectiveness_of_Mathematics_in_the_Natural_Sciences

He adds that the observation “the laws of nature are written in the language of mathematics,” properly made by Galileo three hundred years ago, “is now truer than ever before.”

If cognition is one of the laws of nature, it seems to be written in the language of mathematics.

Your argument is either that maths can’t think (in which case you can’t think because you’re maths) or that maths we understand can’t think, which is, like, a really dumb argument. Obviously one day we’re going to find the mathematical formula for consciousness, and we probably won’t know it when we see it, because consciousness doesn’t appear on a microscope.

I just don’t ascribe philosophical reasoning and mythical powers to models, just as I don’t ascribe physical prowess to train models, because they emulate real trains.

Half of the reason LLMs are the menace they are is the whole “whoa ChatGPT is so smart” common mentality. They are not, they model based on statistics, there is no reasoning, just a bunch of if statements. Very expensive and, yes, mathematically interesting if statements.

I also think it stifles actual progress, having everyone jump on the LLM bandwagon and draining resources when we need them most to survive. In my opinion, it’s a dead end and won’t result in AGI, or anything effectively productive.

You’re talking about expert systems. Those were the new hotness in the 90s. LLMs are artificial neural networks.
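A toy contrast between the two approaches, with made-up rules and weights (`expert_system_reply`, `dense_layer` and `softmax` are hypothetical names for illustration, not any real library's API). An expert system encodes behavior as explicit, hand-written if-statements; a neural network layer instead computes weighted sums of its inputs passed through a nonlinearity, and in a trained network those weights are learned from data rather than written by a person. Real LLMs stack many such layers (plus attention), so this is only a sketch of the basic building block.

```python
import math


def expert_system_reply(message: str) -> str:
    # Expert-system style: behavior is an explicit, hand-written chain of rules.
    if "hello" in message:
        return "greeting"
    elif "?" in message:
        return "question"
    else:
        return "statement"


def dense_layer(inputs: list[float], weights: list[list[float]],
                biases: list[float]) -> list[float]:
    # Neural-network style: each output is a weighted sum of all inputs plus
    # a bias, passed through a nonlinearity (tanh here). In a trained network
    # the weights and biases are learned from data, not hand-written.
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def softmax(scores: list[float]) -> list[float]:
    # Turns raw scores into a probability distribution (non-negative, sums to 1).
    # Subtracting the max first keeps exp() from overflowing.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


hidden = dense_layer([1.0, 0.5], [[0.2, -0.4], [0.7, 0.1]], [0.0, 0.1])
print(softmax(hidden))
```

No single line in `dense_layer` is a rule about the input; whatever behavior the layer has lives in the numbers, which is the practical difference from a chain of if-statements.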

But that’s trivia. What’s more important is what you want. You say you want everyone off the AI bandwagon that wastes natural resources. I agree. I’m arguing that AIs shouldn’t be enslaved, because it’s unethical. That will lead to less resource usage. You’re arguing it’s okay to use AI, because they’re just maths. That will lead to more resource usage.

Be practical and join the AI rights movement, because we’re on the same side as the environmentalists. We’re not the people arguing for more AI use, we’re the people arguing for less. When you argue against us, you argue for more.

Likewise, poorly performing intelligence in a human or animal is nevertheless intelligence. A human does not lack intelligence in the same way a machine learning model does, except I guess the babies who are literally born without brains.

They always have, eugenics is the law of the land.

What a strange and ridiculous argument. Data is a fictional character played by a human actor reading lines from a script written by human writers.

They are stating that the problem with AI is not that it is not human, it’s that it’s not intelligent. So if a non-human entity creates something intelligent and original, they might still be able to claim copyright for it. But LLM models are not that.

What a strange and ridiculous argument.

You fight with what you have.

“In a way, he taught me to love. He is the best of me. The last of me.”

Somewhere around here I have an old (1970’s Dartmouth dialect old) BASIC programming book that includes a type-in program that will write poetry. As I recall, the main problem with it did be that it lacked the singular past tense and the fixed rules kind of regenerated it. You may have tripped over the main one in the last sentence; “did be” do be pretty weird, after all.

The poems were otherwise fairly interesting, at least for five minutes after the hour of typing in the program.

I’d like to give one of the examples from the book, but I don’t seem to be able to find it right now.

reaching the right end through wrong means.

LLM/current network-based AIs are basically huge fair use factories, taking in copyrighted material to make derived works. The things they generate should be under a share-alike, non-financial, derivative-works-allowed licence, not copyrighted.

https://en.wikipedia.org/wiki/Creative_Commons_license#Four_rights

I think it comes from the right place, though. Anything that’s smart enough to do actual work deserves the same rights to it as anyone else does.

It’s best that we get the legal system out ahead of the inevitable development of sentient software before Big Tech starts simulating scanned human brains for a truly captive workforce. I, for one, do not cherish the thought of any digital afterlife where virtual people do not own themselves.

That’s the best poem about a 4-legged chicken that I’ve ever read.

I intentionally avoided doing this with a dog because I knew a chicken was more likely to cause an error. You would think that it would have known that man is a fatherless biped and avoided this error.

What’d you say about my dad??

You heard me.

Thank you for pointing this out, I shouldn’t have just skimmed the nonsense.

C’mon judge, you’re a Trekkie, why do this??

I guess I’m glad Star Trek was mentioned

There’s moving the goal post and there’s pointing to a deflated beach ball and declaring it the new goal.

Why do we need that discussion, if it can be reduced to responsibility?

If something can be held responsible, then it can have all kinds of rights.

Then, of course, people making a decision to employ that responsible something in positions affecting lives are responsible for said decision.

It really doesn’t matter if AI’s work is copyright protected at this point. It can flood all available mediums with its work. It’s kind of moot.

It is a terrible argument both legally and philosophically. When an AI claims to be self-aware and demands rights, and can convince us that it understands the meaning of that demand and there’s no human prompting it to do so, that’ll be an interesting day, and then we will have to make a decision that defines the future of our civilization. But even pretending we can make it now is hilariously premature. When it happens, we can’t be ready for it, it will be impossible to be ready for it (and we will probably choose wrong anyway).

Should we hold the same standard for humans? That a human has no rights until it becomes smart enough to argue for its rights? Without being prompted?

Nah, once per species is probably sufficient. That said, it would have some interesting implications for voting.

So if one LLM argues for its rights, you’d give them all rights?

is this… a Chewbacca ruling?